Why Nvidia Is Cheaper Than the Market

Nvidia dominates AI. So why are investors suddenly unwilling to pay up?

The world’s largest company is an underdog.

That sounds ridiculous at first glance. Nvidia is worth roughly $4.4 trillion, after all. But despite that size and dominance, the stock is cheaper than the market.

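On a forward basis, using 2027 earnings estimates, Nvidia trades at about 18x earnings, while the S&P 500 trades closer to 19x. In other words, Nvidia now carries a lower forward price-to-earnings (P/E) multiple than the stock market as a whole, something we haven't really seen since around 2015. Measured against trailing earnings, Nvidia's multiple is much higher; the gap reflects how fast analysts expect its profits to keep growing.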
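For readers who want the arithmetic: a forward P/E divides today's price by expected future earnings per share. At the company level that is roughly market cap divided by expected net income, assuming the share count stays flat. The short Python sketch below uses only the approximate figures quoted above ($4.4 trillion, 18x, 19x) to back out the 2027 earnings the multiple implies and the size of Nvidia's discount to the market. The function and variable names are illustrative, and the result is a back-of-the-envelope estimate, not an analyst figure.

```python
# Back-of-the-envelope forward P/E arithmetic using the approximate figures from the text.
# Assumption: share count is constant, so market cap / net income equals price / EPS.

def implied_earnings(market_cap: float, pe_multiple: float) -> float:
    """Net income implied by a market cap and a P/E multiple."""
    return market_cap / pe_multiple


NVDA_MARKET_CAP = 4.4e12  # roughly $4.4 trillion, as cited in the text
NVDA_FORWARD_PE = 18.0    # on 2027 earnings estimates, as cited in the text
SP500_FORWARD_PE = 19.0   # as cited in the text

earnings_2027 = implied_earnings(NVDA_MARKET_CAP, NVDA_FORWARD_PE)
discount = 1 - NVDA_FORWARD_PE / SP500_FORWARD_PE

print(f"Implied 2027 net income: ${earnings_2027 / 1e9:,.0f}B")  # about $244B
print(f"Discount to S&P 500 multiple: {discount:.1%}")            # about 5.3%
```

Putting trailing twelve-month earnings in the denominator instead gives the trailing P/E, which is why the two multiples can differ so much for a company whose profits are growing quickly.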

So why are investors suddenly so skeptical of the company?

The Memory Bottleneck

The first reason has to do with memory.

Nvidia is known for its GPUs, the chips that do the heavy number-crunching for AI. But what the company actually sells to data centers are AI accelerators, which combine those GPUs with large stacks of high-bandwidth memory, or HBM.

HBM is critical because it feeds data to the GPU fast enough that the chip doesn't sit around waiting. Without enough of it, much of that compute power goes to waste.
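One standard way to make this concrete is the roofline model: a chip's attainable throughput is capped either by its peak compute or by its memory bandwidth multiplied by the workload's arithmetic intensity (operations performed per byte moved from memory). The Python sketch below applies that formula. The peak-compute, bandwidth, and intensity numbers are hypothetical round figures chosen for illustration, not specifications of any Nvidia product.

```python
# Roofline model sketch: attainable throughput = min(peak compute, bandwidth * arithmetic intensity).
# All numbers below are hypothetical round figures, not real chip specifications.

def attainable_flops(peak_flops: float, mem_bandwidth_bytes: float,
                     intensity_flops_per_byte: float) -> float:
    """Throughput the chip can sustain when limited by compute or by memory bandwidth."""
    return min(peak_flops, mem_bandwidth_bytes * intensity_flops_per_byte)


PEAK = 1000e12    # hypothetical: 1,000 TFLOP/s of compute
BANDWIDTH = 4e12  # hypothetical: 4 TB/s of memory bandwidth

for intensity in (50, 250, 500):  # FLOPs performed per byte fetched from memory
    achieved = attainable_flops(PEAK, BANDWIDTH, intensity)
    print(f"intensity {intensity:>3}: {achieved / 1e12:,.0f} TFLOP/s "
          f"({achieved / PEAK:.0%} of peak)")
```

At low intensity the hypothetical chip reaches only a fraction of its peak because it is waiting on memory; raising bandwidth lifts that ceiling, which is the sense in which HBM keeps the GPU busy.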

Right now, HBM is a massive bottleneck. The major memory suppliers, including SK Hynix, Samsung, and Micron, are effectively sold out of HBM capacity through this year. As a result, prices have surged.

For Nvidia, that creates two problems.

First, it caps how many accelerators the company can ship. Even if demand is enormous, Nvidia simply can’t sell more units if there isn’t enough memory available to bundle with its GPUs.

Second, it pushes costs higher. Nvidia has a lot of pricing power and can pass some of those increases on to customers. But if it can’t pass through all of them, margins could take a hit.
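The two problems can be sketched in a few lines of Python. Shipments are capped by whichever runs out first, customer demand or memory, and the margin effect depends on what fraction of a memory cost increase gets passed on through higher prices. Every number here (prices, costs, stacks per accelerator, memory supply) and every function name is hypothetical, picked only to make the arithmetic easy to follow; none reflects Nvidia's actual figures.

```python
# Hypothetical illustration of the two HBM problems: a supply cap and a margin squeeze.
# All inputs are made-up round numbers, not Nvidia data.

def shippable_units(demand: int, hbm_stacks_available: int, stacks_per_unit: int) -> int:
    """Units that can ship: limited by demand or by how many units the memory supply can fill."""
    return min(demand, hbm_stacks_available // stacks_per_unit)


def unit_economics(price: float, cost: float, memory_cost_increase: float,
                   pass_through: float) -> tuple[float, float]:
    """Per-unit gross profit and gross margin after a memory cost increase.

    pass_through is the fraction (0 to 1) of the increase added to the selling price.
    """
    new_price = price + pass_through * memory_cost_increase
    new_cost = cost + memory_cost_increase
    profit = new_price - new_cost
    return profit, profit / new_price


print(shippable_units(demand=1000, hbm_stacks_available=6000, stacks_per_unit=8))  # 750

for share in (1.0, 0.5, 0.0):
    profit, margin = unit_economics(price=100, cost=25, memory_cost_increase=10,
                                    pass_through=share)
    print(f"pass-through {share:.0%}: profit {profit:.1f}, margin {margin:.1%}")
```

In this toy setup the starting margin is 75%. Full pass-through preserves profit per unit, but the percentage margin still slips to about 68% because the price base is larger; anything short of full pass-through cuts profit per unit as well.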

Rotation

Related to this is what you could call rotation.

In markets, short-term traders tend to gravitate toward whatever has momentum. Over the past couple of years, different parts of the AI trade have taken turns leading as new bottlenecks emerge.

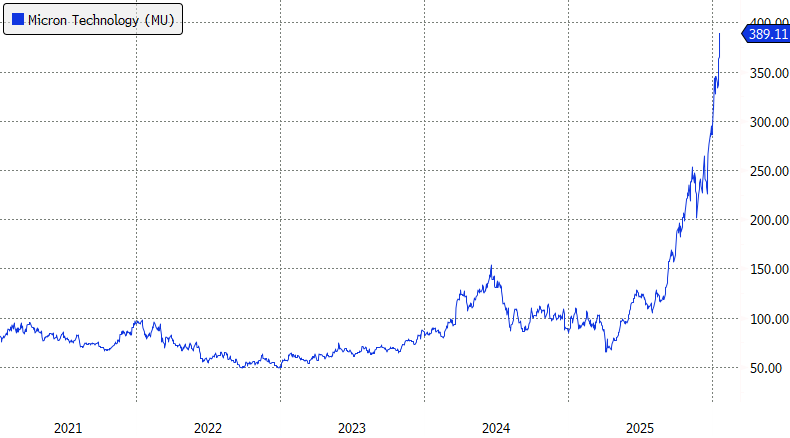

Right now, the money is flowing into memory stocks. If you look at a chart of Micron, it’s basically a straight line up.

Momentum and narrative matter a lot in short-term market moves. At the moment, both are supporting memory and, as a result, sucking some oxygen away from Nvidia.

Competition

The other, more important piece of the puzzle is competition.

Nvidia has been the biggest winner of the AI boom. It’s been charging very high prices and earning extremely high margins because its GPUs became the default infrastructure for training and running large AI models.

But a few months ago, perceptions started to shift.

Google unveiled Gemini 3, an AI model that was trained and runs on Google's own custom chips, known as tensor processing units (TPUs). Gemini 3 was widely viewed as state of the art, and it didn't rely on Nvidia GPUs.

That changed the narrative. It introduced the idea that you can do cutting-edge AI without Nvidia.

There have even been rumblings that Google could eventually sell its TPUs to other companies rather than keeping them entirely in-house. If that were to happen, it would almost certainly eat into Nvidia’s market share at the margin.

There's also a broader background concern that resurfaces periodically: the idea that the AI boom is peaking, that demand may not materialize the way people expect, and that the massive infrastructure build-out might not end up being worth the cost.

That concern has been around for a while, and AI stocks tend to pull back whenever it resurfaces, then rebound when it fades. It’s not Nvidia-specific.

My Take

So those are the main factors weighing on Nvidia right now.

Personally, I think the memory bottleneck will fade over time. I’m also a believer that AI is here to stay. Hundreds of millions of people already use AI products, and that number is only going to grow.

The more consequential long-term question is competition.

Custom chips like TPUs could erode Nvidia's market share over time. That said, Nvidia is still in a unique position: GPUs are far more flexible than TPUs, which matters a lot while model architectures are still evolving.

On top of that, Nvidia doesn't just sell hardware. It sells a tightly integrated platform of software (including its CUDA programming platform), tools, and networking that makes its chips usable at scale, and that is much harder to replicate.

All of this is why I think sentiment toward Nvidia may be a bit too bearish right now.

Of course, the question is what could change the narrative.

Here’s one possibility.

Most of today's top models outside of Gemini, including those used by ChatGPT, Claude, and Grok, were trained on Nvidia's older Hopper-generation chips. Models trained on Nvidia's newer Blackwell chips are expected to be released in the first half of this year.

If those models end up being clearly superior to what’s already out there, the narrative could shift back in Nvidia’s favor fairly quickly.
